Author: Paul J Bruemmer

The Future of SEO in the Age of Generative AI

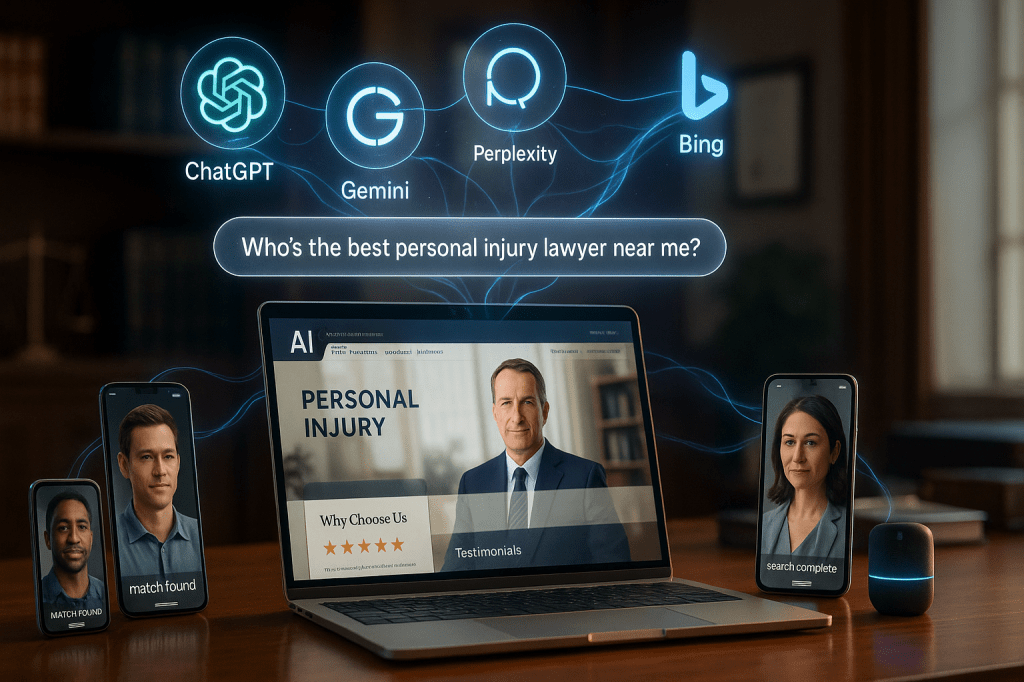

Search Engine Optimization is rapidly evolving beyond blue links and keyword matching. With the rise of generative AI systems like ChatGPT, Perplexity, Gemini, and Claude, a new paradigm has emerged—LLMO (Large Language Model Optimization) and GEO (Generative Engine Optimization).

These new disciplines revolve around optimizing not for traditional SERPs but for answers generated by AI. To stay ahead, SEOs must now understand how AI models retrieve, ground, and generate information—and how to ensure their content is cited, surfaced, and trusted.

How to Optimize Content for LLMO

LLMO focuses on being the source large language models cite or paraphrase when responding to prompts.

Key Tactics:

- Entity-Centric Optimization: Use structured data to clearly define entities (people, organizations, legal cases, services) on your site. This helps align your pages with the knowledge graphs that search engines and AI systems draw on.

- Semantic Depth > Surface Keywords: Generative models prefer content with conceptual richness and topic completeness. Cover why, how, and what-if angles to become the “best” answer.

- Citeable, Fact-Based Writing: Use bullet points, dates, citations, and named references—AI loves clarity and verifiability.

- Use Natural Q&A Patterns: Include FAQ sections and conversational content to better match user queries as interpreted by generative models.

- Clean Source Signals: Publish under author profiles with established expertise, link to reputable sources, and maintain a strong off-site reputation (PR, backlinks, mentions).
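The entity-centric and Q&A tactics can be made concrete with a minimal Python sketch. It emits Schema.org `Person` and `FAQPage` markup as JSON-LD. The person, job title, and URLs are hypothetical placeholders. On a real site, `sameAs` would point to genuine profiles (for example a Wikidata or LinkedIn page) that describe the same entity.

```python
import json


def person_jsonld(name, job_title, url, same_as):
    """Build a Schema.org Person entity as a JSON-LD dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "jobTitle": job_title,
        "url": url,
        # sameAs lists other pages describing the same entity, which helps
        # systems connect this page to a knowledge-graph node.
        "sameAs": same_as,
    }


def faq_jsonld(pairs):
    """Build a Schema.org FAQPage from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def to_script_tag(data):
    """Serialize JSON-LD into the <script> element a page would embed."""
    body = json.dumps(data, indent=2, ensure_ascii=False)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical entity and placeholder URLs, for illustration only.
person = person_jsonld(
    "Jane Doe",
    "Jury Consultant",
    "https://example.com/about/jane-doe",
    ["https://www.linkedin.com/in/example-jane-doe"],
)
faq = faq_jsonld([
    ("What is LLMO?", "Large Language Model Optimization: optimizing content "
                      "so that AI models cite or paraphrase it."),
])
print(to_script_tag(person))
print(to_script_tag(faq))
```

Generating markup from one source of truth, rather than hand-editing it on each page, keeps entity names and profile links consistent across the site.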

How Are Authoritative Sources Chosen for Grounding?

Grounding is the process by which a generative AI system uses real-world data to validate its outputs. It often happens during RAG workflows (Retrieval-Augmented Generation).

How sources are selected:

- Semantic Proximity: The content must be highly relevant to the prompt in meaning—not just keyword overlap.

- Reputation & Authority: Search engines (and AI pipelines built on them) tend to favor content with strong E-E-A-T signals: Experience, Expertise, Authoritativeness, and Trustworthiness.

- Link Graph & Citations: Content that is heavily cited, internally consistent, and well-linked is more likely to be used.

- Structured Knowledge Inclusion: Schema.org markup (typically expressed as JSON-LD) and accurate metadata can improve retrievability and classification confidence.
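The selection criteria above can be pictured as a single scoring step. This is a minimal sketch built on loud assumptions. The `semantic_sim` and `authority` values, the 0.7 weighting, and the 0.5 cutoff are all hypothetical, because no AI system publishes how it weighs these signals.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    url: str
    semantic_sim: float  # similarity to the prompt, assumed already in [0, 1]
    authority: float     # hypothetical trust/authority score in [0, 1]


def select_sources(candidates, weight=0.7, threshold=0.5, k=3):
    """Blend relevance with authority, drop weak candidates, keep the top k URLs."""
    scored = [
        (weight * c.semantic_sim + (1 - weight) * c.authority, c.url)
        for c in candidates
    ]
    # Quality gate: anything scoring below the threshold never reaches the model.
    kept = [pair for pair in scored if pair[0] >= threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [url for _, url in kept[:k]]


pool = [
    Candidate("https://example.com/a", semantic_sim=0.9, authority=0.8),
    Candidate("https://example.com/b", semantic_sim=0.9, authority=0.1),
    Candidate("https://example.com/c", semantic_sim=0.3, authority=0.9),
    Candidate("https://example.com/d", semantic_sim=0.6, authority=0.7),
]
print(select_sources(pool))
# → ['https://example.com/a', 'https://example.com/b', 'https://example.com/d']
```

Page `c` is highly authoritative but off-topic, so it falls below the cutoff. This mirrors the point that semantic proximity comes first and authority breaks ties among relevant pages.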

What Role Do Search Engines and Knowledge Graphs Play in RAG?

In Retrieval-Augmented Generation (RAG), the AI model queries an external data source—often a vector search layer or traditional search engine—before answering.

Search engines (like Bing, Google, Brave Search) and knowledge graphs play roles as:

- Indexers: Providing a filtered pool of authoritative, up-to-date data.

- Rerankers: Evaluating retrieved content based on relevance and authority.

- Signal Sources: Supplying structured and unstructured data that retrieval systems embed and draw on to ground responses.

Knowledge graphs, in particular, help connect entities and concepts so that the model understands relationships (e.g., Paul Bruemmer → Jury Analyst → Pretrial Research Tools).
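A knowledge graph stores facts as (subject, relation, object) triples. Following those edges is what lets a system connect related entities. The sketch below encodes the example chain above. The relation names `works_as` and `uses` are hypothetical labels chosen for illustration.

```python
from collections import deque

# Hypothetical (subject, relation, object) triples; relation names are illustrative.
TRIPLES = [
    ("Paul Bruemmer", "works_as", "Jury Analyst"),
    ("Jury Analyst", "uses", "Pretrial Research Tools"),
]


def build_graph(triples):
    """Index triples as an adjacency list: subject -> [(relation, object), ...]."""
    graph = {}
    for subject, relation, obj in triples:
        graph.setdefault(subject, []).append((relation, obj))
    return graph


def find_path(graph, start, goal):
    """Breadth-first search for the shortest chain of relations from start to goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, path + [(node, relation, neighbor)]))
    return None  # no chain of relations connects the two entities


graph = build_graph(TRIPLES)
for subject, relation, obj in find_path(graph, "Paul Bruemmer", "Pretrial Research Tools"):
    print(f"{subject} --{relation}--> {obj}")
```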

How RAG Works (Retrieval-Augmented Generation)

RAG is a hybrid AI architecture that improves factual accuracy by retrieving real-world documents before generating answers.

Steps:

- Query Understanding: The user's prompt is converted into a vector embedding that captures its meaning.

- Document Retrieval: A retrieval engine (e.g., Elasticsearch, Pinecone, Google Search API) pulls the top-k most relevant documents.

- Contextual Ingestion: The retrieved passages are inserted into the LLM's prompt as context.

- Answer Generation: The LLM uses this grounded context to generate a more accurate, specific, and up-to-date response.

The result combines the LLM's fluent generation with retrieved, verifiable evidence.
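The steps above can be sketched end to end in Python. To stay self-contained, this toy version uses a bag-of-words term count in place of a real embedding model and an in-memory list in place of a retrieval engine. The final call to an LLM is left out.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in for a real embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, docs, k=2):
    """Steps 1-2: embed the query, then return the k most similar documents."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query, passages):
    """Step 3: insert the retrieved passages into the prompt as numbered context."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below and cite them by number.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


docs = [
    "RAG retrieves documents before the model generates an answer.",
    "Schema.org markup describes entities such as people and organizations.",
    "Grounding ties a generated answer to retrieved documents.",
]
query = "How does RAG retrieve documents?"
prompt = build_prompt(query, retrieve(query, docs))
print(prompt)  # Step 4 would send this prompt to an LLM (not shown).
```

Production systems swap in dense embeddings and an approximate nearest-neighbor index. However, the shape of the pipeline (embed, retrieve top-k, assemble context, generate) stays the same.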

How Grounding Works

Grounding ensures that the model’s output is tied to verifiable and factual information.

There are two main types:

- Explicit Grounding: The model cites documents directly during response generation (e.g., Perplexity.ai).

- Implicit Grounding: The model adjusts its answer internally based on contextual data, without citation.
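Explicit grounding is easiest to see in a response that carries numbered citation markers. This sketch assumes that the answer uses `[n]` markers referring to a 1-based list of retrieved sources. That is a common convention, not a universal one. The sketch maps each marker back to a URL.

```python
import re


def cited_sources(answer, sources):
    """Map 1-based [n] markers in an answer to source URLs, in order of first use."""
    cited = []
    for marker in re.findall(r"\[(\d+)\]", answer):
        index = int(marker) - 1  # convert the 1-based marker to a list index
        if 0 <= index < len(sources) and sources[index] not in cited:
            cited.append(sources[index])
    return cited  # markers pointing outside the source list are ignored


sources = ["https://example.com/rag", "https://example.com/grounding"]
answer = "RAG retrieves documents first [1]. Grounding ties answers to them [2][1]."
print(cited_sources(answer, sources))
```

With implicit grounding, no such markers exist, so a page can shape an answer without receiving visible credit.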

Grounding boosts:

- Trustworthiness

- Regulatory compliance (important in legal, health, finance)

- Visibility (your content can be retrieved and cited in AI-generated answers)

SEOs can influence grounding by:

- Publishing referenceable, semantically rich documents.

- Using clear document structure (H2s, lists, tables).

- Getting cited by trusted third-party platforms (news, .gov, .edu).
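Clear structure matters because retrieval pipelines typically split pages into chunks before embedding them. This minimal sketch assumes a Markdown source in which `## ` marks an H2, and it splits the page into (heading, body) passages. Real pipelines use many other strategies, such as fixed token windows with overlap.

```python
def chunk_by_headings(markdown, level=2):
    """Split Markdown into (heading, body) chunks at the given heading level.

    Text before the first heading is returned with heading None.
    """
    marker = "#" * level + " "
    chunks, heading, body = [], None, []

    def flush():
        # Save the current section unless it is an empty preamble.
        text = "\n".join(body).strip()
        if heading is not None or text:
            chunks.append((heading, text))

    for line in markdown.splitlines():
        if line.startswith(marker):
            flush()
            heading, body = line[len(marker):].strip(), []
        else:
            body.append(line)  # deeper headings such as "### " stay in the body
    flush()
    return chunks


page = (
    "Intro text.\n"
    "## What is RAG?\nRAG retrieves documents.\n"
    "## What is grounding?\nGrounding ties answers to sources.\n"
)
for heading, text in chunk_by_headings(page):
    print(heading, "->", text)
```

A section whose heading states the question and whose body answers it produces a self-contained chunk. That kind of chunk is easy to match against a prompt.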

💡 Final Takeaway for SEOs

To win visibility in the era of generative search and AI assistants:

- Think beyond keywords.

- Think in answers.

- Think in relationships between entities.

- Think in citations, facts, and grounded data.

Your website is no longer just a destination—it’s a source. Optimize it like one.

Google Patents on RAG & Grounding: What SEOs Should Know

Google has filed multiple patents that describe how retrieval and grounding may be implemented in AI-driven search and generative systems. They offer clues, rather than a confirmed blueprint, for optimizing content for future LLM-based rankings and answer generation.

Key Patents & Concepts:

- US11429762B1 – “Selecting and Presenting Answer Passages From Documents”

  - Focuses on how multiple sources are evaluated and ranked for quality before being passed into an answer-generation system.

  - SEO Insight: Google may use quality thresholds and semantic relevance to determine what content gets included in LLM prompts. Make your content chunkable, coherent, and answer-focused.

- US20220355515A1 – “Training Data Generation for Language Models Using Knowledge Graph Grounding”

  - This patent describes how structured knowledge from graphs (like Google’s Knowledge Graph) is used to enhance training and grounding in LLMs.

  - SEO Insight: Content linked to entities in Google’s Knowledge Graph (via Schema.org markup, Wikidata IDs, etc.) is more likely to be surfaced and trusted by AI systems.

- US11385987B2 – “Providing Factual Responses to Queries”

  - Discusses using external factual sources to ground generative outputs and ensure accuracy.

  - SEO Insight: Factual, timestamped, and well-sourced content is more likely to be selected for AI-generated answers. Legal, medical, and financial SEOs should focus especially on factual grounding.

- US11195479B2 – “Natural Language Processing System with Contextual Grounding”

  - Describes integrating search-based context into LLMs to improve answer relevance and reduce hallucinations.

  - SEO Insight: Context-rich, semantically optimized clusters (supporting content, pillar pages) help the AI better ground your pages.

Takeaway for SEOs:

- These patents suggest Google is working to combine search retrieval, knowledge graphs, and LLMs.

- SEOs must optimize not just for rankings, but for retrievability, semantic richness, and entity association to get cited by next-gen AI systems.

Want help mapping your site content to entities and grounding signals? I can assist.